Apache Zeppelin

In this post I would like to show you a tool called Apache Zeppelin.

Zeppelin is an Apache project that provides a live notebook environment. It can run multiple languages, such as Python and Scala, and tools such as Apache Spark and Apache Ignite; each of these capabilities is called an interpreter. In the project's own words: "Web-based notebook that enables data-driven, interactive data analytics and collaborative documents with SQL, Scala and more".

Running Apache Zeppelin is simple: just download the zipped binaries from the download section of the Zeppelin project page, here.

On Linux, in terminal from the Zeppelin home folder, run:

This will start the web server that is the GUI for Zeppelin.

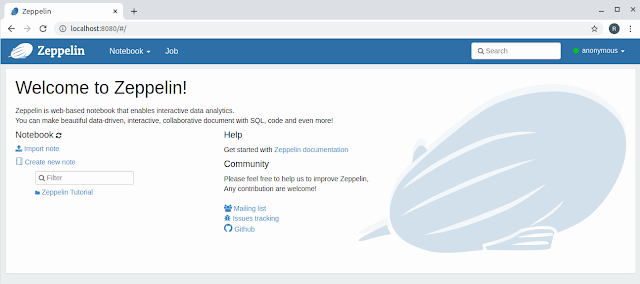

The main screen shows you the available notebooks.

Lets create a new notebook by clicking Create new note. After entering the notebook name and pressing Create.

Alternatively you can click on one of the existing notebooks to open and displays.

A notebook is a set of paragraphs that can use different interpreters:

Pressing the play button or Shift+Enter will execute the paragraph and display it output bellow it.

The %pyspark will start a spark session in Spark standalone (supplied with Zeppelin) or in Spark cluster if you specify one and then execute the code.

These are just the very basics of Apache Zeppelin, there are additional interrupters and more features. That we explore in next posts.

Zeppelin is an Apache project meant to be a notebook live environment that can run multiple languages like Python and Scala or tools like Apache Spark, Ignite etc. each one of these ability is called Interpreter. In there own words - "Web-based notebook that enables data-driven, interactive data analytics and collaborative documents with SQL, Scala and more".

Running Apache Zeppelin is very simple, just download the zipped binaries from the download section of the Zeppelin project page - here.

On Linux, in terminal from the Zeppelin home folder, run:

$ zeppelin-0.8.2-bin-all ./bin/zeppelin.sh

The main screen shows you the available notebooks.

Lets create a new notebook by clicking Create new note. After entering the notebook name and pressing Create.

Alternatively you can click on one of the existing notebooks to open and displays.

A notebook is a set of paragraphs that can use different interpreters:

Python

The first line of a paragraph starts with a % sign followed by the name of the interpreter Zeppelin should use to run the code. For example, %python tells Zeppelin to execute the code that follows with the Python interpreter. Let's try adding this code:

    %python
    print("hello world")
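To make the % convention concrete, here is a toy Python sketch of the idea: the first line names an interpreter, and the rest of the paragraph is handed to that interpreter. It is not how Zeppelin is implemented; `INTERPRETERS`, `run_python` and `run_paragraph` are hypothetical names, the "md" entry is a stand-in that simply echoes its text, and rejecting a paragraph without a % line is a simplification made only for this sketch.

```python
import contextlib
import io
from typing import Callable, Dict


def run_python(code: str) -> str:
    """Stand-in 'python' interpreter: execute the code and capture what it prints."""
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(code, {})  # run in a fresh, empty namespace
    return buffer.getvalue()


# Hypothetical registry: interpreter name -> function that runs the code.
INTERPRETERS: Dict[str, Callable[[str], str]] = {
    "python": run_python,
    "md": lambda code: code.strip() + "\n",  # toy stand-in: echoes the text
}


def run_paragraph(paragraph: str) -> str:
    """Dispatch a paragraph to the interpreter named on its first line."""
    first_line, _, body = paragraph.partition("\n")
    if not first_line.startswith("%"):
        raise ValueError("this sketch expects a %interpreter first line")
    name = first_line[1:].strip()
    if name not in INTERPRETERS:
        raise KeyError(f"unknown interpreter: {name}")
    return INTERPRETERS[name](body)


print(run_paragraph('%python\nprint("hello world")'), end="")  # hello world
```

Changing only the first line, for example from %python to %md, sends the same text to a different backend, which is the role the % line plays in a Zeppelin paragraph.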

PySpark

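Create a new paragraph and enter the following code:

    %pyspark
    # sc is the SparkContext that Zeppelin provides to %pyspark paragraphs
    list_of_persons = [('Arike', 28, 78.6), ('Bob', 32, 45.32), ('Corry', 65, 98.47)]
    # Turn the list of (name, age, score) tuples into an RDD, then into a DataFrame
    df = sc.parallelize(list_of_persons).toDF(['name', 'age', 'score'])
    df.printSchema()  # print the column names and their inferred types
    df.show()         # print the rows as a table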
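For reference, `df.printSchema()` prints each column name with the type Spark inferred from the Python values. The toy sketch below reproduces that output in plain Python, without Spark. The `spark_type_name` and `schema_tree` helpers are hypothetical, they cover only str, int, float and bool, and looking only at the first row is a simplification, not Spark's full inference logic.

```python
from typing import Sequence


def spark_type_name(value: object) -> str:
    """Map a Python value to the Spark SQL type name printSchema() shows (simplified)."""
    if isinstance(value, bool):  # check bool first: bool is a subclass of int
        return "boolean"
    if isinstance(value, int):
        return "long"
    if isinstance(value, float):
        return "double"
    if isinstance(value, str):
        return "string"
    raise TypeError(f"type not covered by this sketch: {type(value).__name__}")


def schema_tree(rows: Sequence[tuple], names: Sequence[str]) -> str:
    """Render a printSchema()-style tree, taking each column's type from the first row."""
    lines = ["root"]
    for name, value in zip(names, rows[0]):
        lines.append(f" |-- {name}: {spark_type_name(value)} (nullable = true)")
    return "\n".join(lines)


list_of_persons = [('Arike', 28, 78.6), ('Bob', 32, 45.32), ('Corry', 65, 98.47)]
print(schema_tree(list_of_persons, ['name', 'age', 'score']))
# root
#  |-- name: string (nullable = true)
#  |-- age: long (nullable = true)
#  |-- score: double (nullable = true)
```

Note that Python integers show up as long (64-bit) rather than int, and Python floats as double.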

Angular

Another useful interpreter is %angular, which renders Angular code.

Example modified from: https://www.w3schools.com/angular/
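Zeppelin's Angular display system renders paragraph output that begins with %angular, so a paragraph in another language can also produce an Angular view by printing such a string. Below is a minimal sketch for a %python paragraph. It assumes that the Python interpreter's printed output passes through the display system in the same way. `angular_output` and `TEMPLATE` are hypothetical names, and the template is only written in the spirit of the w3schools AngularJS examples.

```python
def angular_output(template: str) -> str:
    """Hypothetical helper: prefix an AngularJS template with the %angular directive.

    Assumption: Zeppelin renders output that starts with "%angular" as an Angular view.
    """
    return "%angular " + template.strip()


# Toy template: the input box is bound to `name`, and {{name}} echoes what is typed.
TEMPLATE = """
<div ng-init="name='Zeppelin'">
  <p>Name: <input type="text" ng-model="name"></p>
  <p>Hello {{name}}!</p>
</div>
"""

print(angular_output(TEMPLATE))
```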

These are just the basics of Apache Zeppelin; there are additional interpreters and more features that we will explore in future posts.
